How We Designed Our Servers at PearAI

I spent the last 2 months working on PearAI, an open-source, AI-powered code editor. It's like having an expert on your codebase right next to you, which we achieve with Retrieval-Augmented Generation (RAG). This is my new startup after finishing my B.S. and M.S. at Carnegie Mellon and working for 1.5 years as a software engineer in high-frequency trading.

We are launching our product next week, so this past month we had to build out our server. Since we're building fully in public, here's exactly how we did it. Hopefully it helps you design a server with scalability, resilience, and security in mind.

What's this server for?

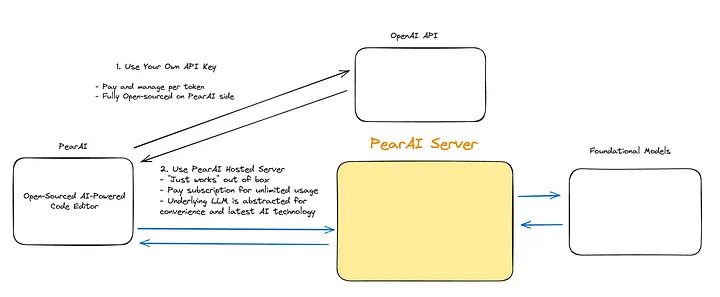

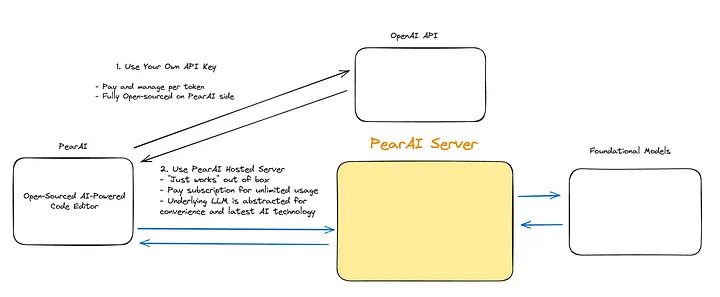

PearAI offers two ways to use an LLM:

- Use PearAI's hosted server: pay a subscription for unlimited usage. The underlying LLM is abstracted away, giving users convenience and access to the latest AI technology.

- Use your own API key: users manage their own keys and pay the LLM provider per token. On PearAI's side, this path is open source and fully transparent. Users can also run their own local LLM.

Our Server Functionalities

1. Authentication

2. Database

3. Proxying

4. Observability

5. Payment

6. Deployment

0. Never Start From Scratch

I'm a big fan of creating my own templates so I never have to start from scratch again. I open source all of them, and you can find the Flask API template I made and used for this project here: Flask Backend API Template

Edit:

DON'T USE FLASK. USE FASTAPI FOR ITS ASYNC CAPABILITIES.

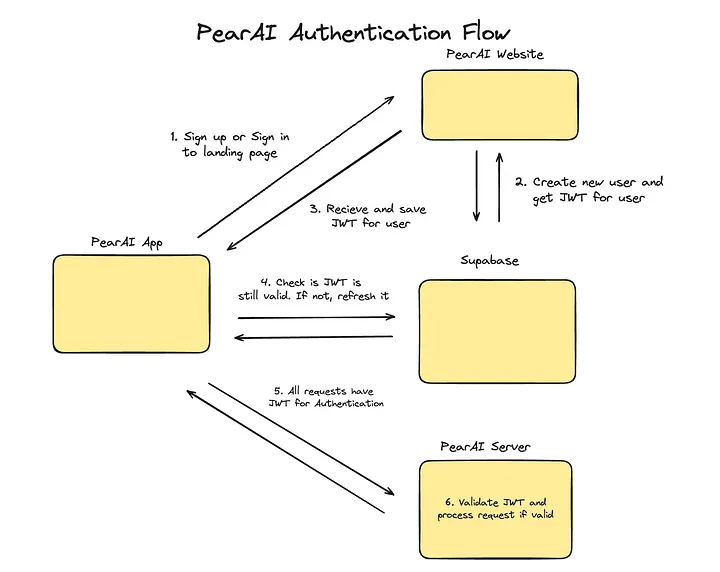
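The async argument is easiest to see with the standard library alone. A proxying server spends most of each request waiting on the upstream LLM, so handlers that `await` that I/O let a single process overlap many requests; FastAPI lets route handlers be declared with `async def` for exactly this. The sketch below is a minimal illustration, not PearAI's code: `fake_llm_call` and `handle_requests` are hypothetical stand-ins that sleep instead of calling a real provider, and no FastAPI APIs are used.

```python
import asyncio
import time


async def fake_llm_call(prompt: str, latency: float) -> str:
    """Hypothetical stand-in for an upstream LLM request; sleeps instead of doing network I/O."""
    await asyncio.sleep(latency)
    return f"response to {prompt!r}"


async def handle_requests(prompts: list[str], latency: float) -> list[str]:
    # Start every request at once; while one awaits the "network",
    # the event loop is free to make progress on the others.
    return await asyncio.gather(*(fake_llm_call(p, latency) for p in prompts))


if __name__ == "__main__":
    prompts = [f"prompt {i}" for i in range(10)]
    start = time.perf_counter()
    results = asyncio.run(handle_requests(prompts, latency=0.5))
    elapsed = time.perf_counter() - start
    # Ten 0.5 s waits overlap, so the total is close to 0.5 s rather than 5 s.
    print(f"{len(results)} responses in {elapsed:.2f}s")
```

A synchronous Flask worker would serve these ten calls one after another unless you add more threads or processes; with `async def` handlers the waiting overlaps within one event loop.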

1. Authentication

We needed sign-up and sign-in functionality, as well as a JWT for each user. For this, we used Supabase, which handles user authentication and issues JWT access tokens.
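Whatever issues the tokens, the server still has to check them on every request. Here is a minimal, stdlib-only sketch of HS256 JWT verification. It assumes tokens are signed with HS256 and a shared secret (Supabase's JWT secret has historically worked this way, but check which algorithm your project actually uses). `sign_hs256` and `verify_hs256` are hypothetical helpers, not Supabase's API. In production, a maintained library such as PyJWT is the safer choice.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are unpadded base64url; restore padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def sign_hs256(payload: dict, secret: str) -> str:
    """Create an HS256 JWT (hypothetical helper, used here only to produce test tokens)."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, payload)
    )
    sig = hmac.new(secret.encode(), signing_input.encode(), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url_encode(sig)}"


def verify_hs256(token: str, secret: str) -> dict:
    """Return the claims if signature and expiry are valid; otherwise raise ValueError."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        # Never let the token choose its own algorithm (e.g. "none").
        raise ValueError("unexpected algorithm")
    expected = hmac.new(
        secret.encode(), f"{header_b64}.{payload_b64}".encode(), hashlib.sha256
    ).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    # "exp" is a Unix timestamp in seconds.
    if "exp" in claims and claims["exp"] < time.time():
        raise ValueError("token expired")
    return claims
```

A Flask or FastAPI route would call `verify_hs256` on the bearer token from the `Authorization` header and reject the request with a 401 on `ValueError`.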

Edit:

DON'T USE SUPABASE. USE AUTH0.

Summary

In summary, this is the stack we used. Per the edits above, I would now recommend FastAPI over Flask, and Auth0 over Supabase for authentication:

- Primary language: Python

- API Framework: Flask

- Authentication: Supabase

- Payment: Stripe

- Database: Redis + Supabase (PostgreSQL)

- Observability: OpenTelemetry + Axiom

- Deployment: DigitalOcean

Hopefully this was helpful. PearAI is open source, so please help us out by starring the repo here: PearAI GitHub Repo, and consider contributing! If you'd like to use the app, join the waitlist at https://trypear.ai/. We're launching to our first batch of users next week!

Also, feel free to check out my YouTube series, where I'm documenting the entire startup journey with my cofounder FryingPan: https://youtube.com/nang88. Thanks!